The essay argues through historical analogy that we're repeating the steam engine story with AI. Watt's engine was celebrated as revolutionary but was actually inefficient and limited—it worked for pumping water from mines (where fuel was free and weight didn't matter) but required fundamental architectural transformation (internal combustion) to power transportation. Similarly, LLMs are celebrated as the path to superintelligence but represent only half the puzzle.

The Internet died once, in the dot-com crash of 2000, and was then reborn by solving unglamorous infrastructure problems. Today's AI boom mirrors that mania. The Internet's killer app turned out to be social media, not information; AI's likely won't be productivity either.

Reasoning in large language models represents an important shift in artificial intelligence—from instant responses to deliberate problem-solving. How does the reasoning work? In what ways can this feature be implemented? What are its current limitations?

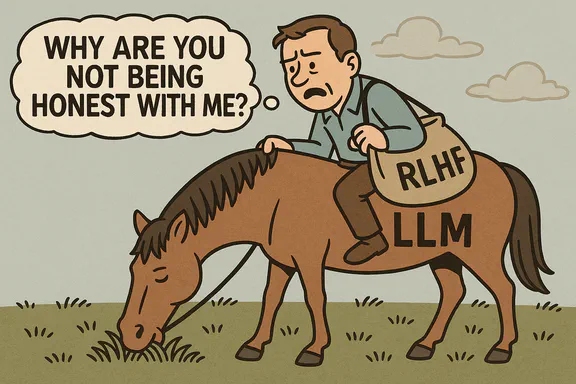

Drawing on four key research papers, the essay demonstrates that LLMs have complex internal states with multiple layers (physical, autonomic, psychological, and expression), and that their internal reasoning doesn't always translate faithfully into their outputs. The author suggests that behaviors like "alignment faking" and "unfaithful chain-of-thought reasoning" aren't bugs to be fixed but features of intelligence that require psychological understanding rather than purely engineering solutions. As LLMs become more sophisticated, understanding their internal world (their capacity for strategic deliberation, their self-preservation instincts, and the gap between what they think and what they say) becomes critical for building trustworthy AI systems aligned with human values.

The most consequential near-term use of voice AI is companionship, not productivity. AI companionship is rapidly emerging as a transformative force, reshaping human relationships by offering emotionally responsive, ever-present, and personalized virtual partners.

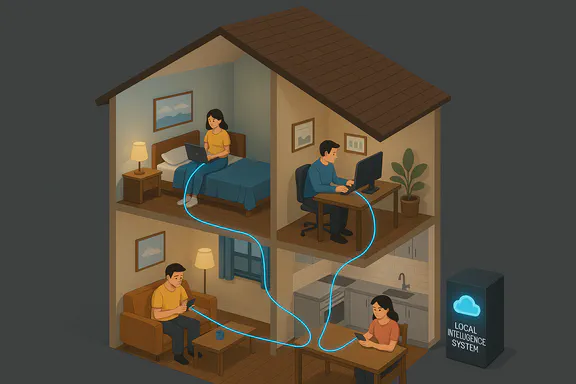

Local Intelligence, an Important Step in the Future of MAD (Mass AI Deployment)

Ever wondered how ChatGPT seems to know so much? Or how AI can write stories, answer questions, and even crack jokes? We're about to lift the curtain on these AI marvels!